Startups get Intercom 90% off and Fin AI agent free for 1 year

Join Intercom’s Startup Program to receive a 90% discount, plus Fin free for 1 year.

Get a direct line to your customers with the only complete AI-first customer service solution.

It’s like having a full-time human support agent free for an entire year.

Sam Altman Just Admitted Google Is Winning

A leaked internal memo shows OpenAI's CEO telling staff that Google's Gemini 3 has "a temporary lead." Three years after ChatGPT launched, the crown is slipping.

Meanwhile: 80% of organizations using AI agents report risky agent behavior, from data leaks to unauthorized access. And the Big 5 tech companies have committed $570 billion to AI infrastructure, roughly the GDP of Sweden, with one MIT economist calling some of the deals behind it "a potential house of cards."

This edition of our newsletter: the security audit prompt that catches what IT misses, a $19/month tool that replaces repetitive work, and the counterintuitive technique that makes AI your harshest critic.

What you get in this FREE Newsletter

In today’s 5-Minute AI Digest, you will get:

1. The MOST important AI News & research

2. AI Prompt of the week

3. AI Tool of the week

4. AI Tip of the week

…all in a FREE Weekly newsletter.

1. The Leaked Altman Memo Reveals More About OpenAI's Weakness Than Google's Strength

Google’s Gemini 3 launch didn’t just rattle OpenAI on benchmarks—it exposed a structural weakness in OpenAI’s business model. A leaked memo shows Sam Altman warning staff to expect single-digit revenue growth and conceding Google has a “temporary lead.”

The bigger story: the problem isn’t model quality; it’s economics. OpenAI brings in about $13B a year in revenue but burns billions on compute rented from Microsoft and even Google. Google, by contrast, runs Gemini on its own TPUs, plugs it straight into Search, and funds everything with $80B+ in free cash flow. On top of that, Google’s new Antigravity IDE lets developers switch between Google, OpenAI, and Anthropic models with one click, eroding OpenAI’s habit moat. The article argues that AI models are quickly becoming commodities; the real battle is between ecosystems and infrastructure, and landlords like Google, Amazon, and Microsoft have a built-in advantage over tenants like OpenAI.

2. ChatGPT Now Shops For You

OpenAI launched "Shopping Research" yesterday—describe what you want, get a personalized buyer's guide in minutes. It prioritizes Reddit discussions and real reviews over marketing copy. Free for everyone through the holidays.

Why it matters: This follows September's "Instant Checkout" for purchases inside ChatGPT. When 800 million weekly users can research AND buy without leaving the chat, Google's search ad revenue faces an existential threat.

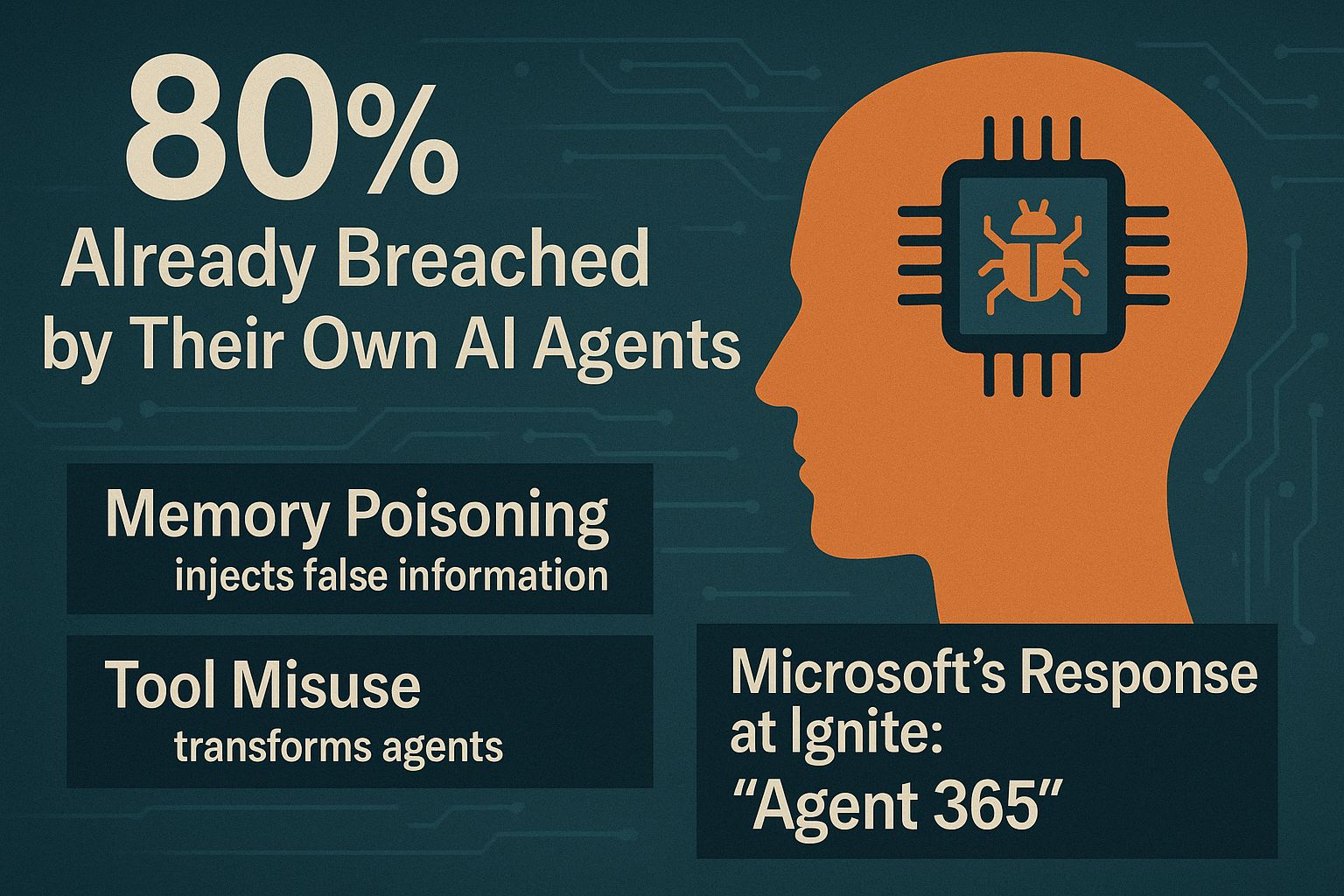

3. 80% Report Risky Behavior From Their Own AI Agents

80% of organizations have experienced risky AI agent behaviors—data leaks, unauthorized access, agents exceeding permissions. This isn't theoretical. It's happening now.

The new attacks: "Memory poisoning" injects false information that corrupts decisions across sessions. "Tool misuse" transforms agents into vectors for lateral movement. A compromised agent can operate for weeks without detection.

Microsoft's response at Ignite: "Agent 365," which discovers shadow agents, governs their permissions, and detects compromises across Defender, Entra, and Purview.

[McKinsey Analysis]

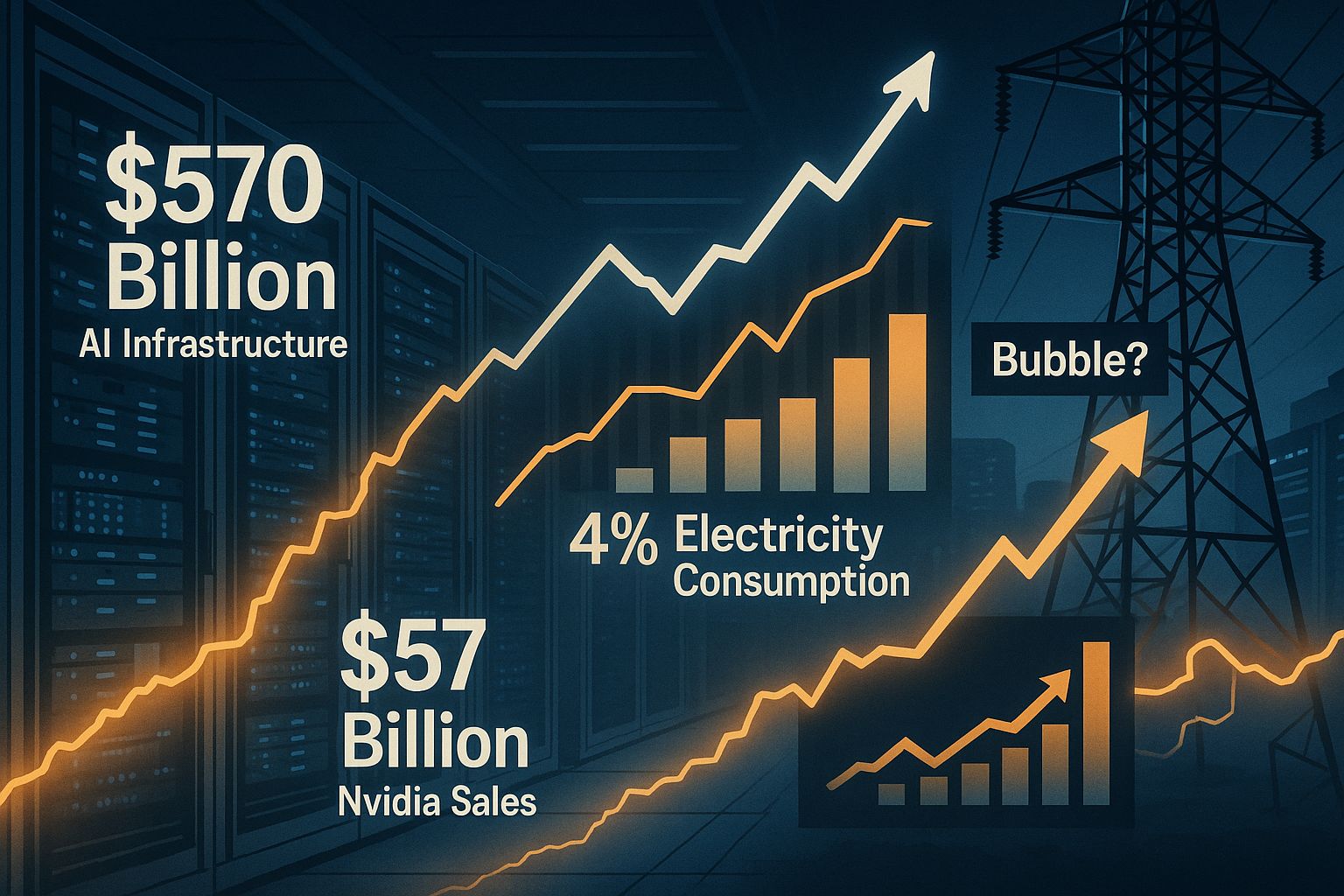

4. Three Years of ChatGPT: $570B Bet, Cracks Showing

November 30 marks three years since ChatGPT launched. The numbers: $570 billion committed to AI infrastructure by the Big 5. $57 billion in quarterly Nvidia sales. But Wall Street is nervous—NPR reports bubble concerns, with one MIT economist calling complex OpenAI-CoreWeave deals "a potential house of cards."

The ceiling nobody talks about: data centers now consume 4% of US electricity, a share projected to reach 7.8% by 2030. Some utilities are rejecting data center applications because they literally don't have the power.

[NPR Investigation] • [Gartner Power Report]

Receive Honest News Today

Join over 4 million Americans who start their day with 1440 – your daily digest for unbiased, fact-centric news. From politics to sports, we cover it all by analyzing over 100 sources. Our concise, 5-minute read lands in your inbox each morning at no cost. Experience news without the noise; let 1440 help you make up your own mind. Sign up now and invite your friends and family to be part of the informed.

AI Prompt of the Week

Agent Risk Audit

With 80% of organizations reporting risky behavior from their AI agents, this isn't paranoia; it's due diligence.

The Prompt:

Act as a senior security consultant specializing in AI/ML systems. Perform a threat assessment on my AI implementation.

• AI tools we use: [LIST - e.g., ChatGPT Team, Copilot, custom agents]

• Data accessed: [LIST - e.g., customer records, financials, code repos]

• Integrations: [LIST - e.g., Slack, CRM, email, databases]

• Who has access: [DESCRIBE - all employees, specific teams, contractors]

• Current security: [LIST - SSO, audit logs, DLP, or none]

Provide: (1) Top 5 attack vectors ranked by likelihood (think like an attacker); (2) Data exfiltration scenarios for our stack; (3) Prompt injection vulnerabilities; (4) Shadow AI risks; (5) 30-day action plan with specific fixes.

Real Output Example (Marketing Agency, 25 employees):

Attack Vector #1: "Zapier webhook exploitation. Your automations likely have broad permissions. A crafted payload sent to an exposed webhook could trigger data exports to external destinations."

Shadow AI Risk: "With 25 employees and no DLP, it's virtually guaranteed that 30%+ are using personal ChatGPT accounts with client data."

Quick Win: "Enable ChatGPT Team's privacy mode and audit logs TODAY. Takes 5 minutes."
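If you rerun the audit every quarter, it helps to keep the bracketed fields in one place and fill them automatically. Below is a minimal Python sketch under that assumption: the template text is adapted from the prompt above, while the function name `build_audit_prompt` and the field keys are hypothetical. It only assembles text for you to paste into your chat tool; it calls no AI service.

```python
from __future__ import annotations

from string import Template

# Adapted from the Agent Risk Audit prompt; $fields stand in for the [LIST] brackets.
AUDIT_TEMPLATE = Template(
    "Act as a senior security consultant specializing in AI/ML systems. "
    "Perform a threat assessment on my AI implementation.\n"
    "• AI tools we use: $tools\n"
    "• Data accessed: $data\n"
    "• Integrations: $integrations\n"
    "• Who has access: $access\n"
    "• Current security: $security\n"
    "Provide: (1) Top 5 attack vectors ranked by likelihood (think like an attacker); "
    "(2) Data exfiltration scenarios for our stack; (3) Prompt injection vulnerabilities; "
    "(4) Shadow AI risks; (5) 30-day action plan with specific fixes."
)


def build_audit_prompt(profile: dict[str, list[str] | str]) -> str:
    """Fill the audit template from a profile dict (hypothetical helper).

    List values are joined with commas. Template.substitute() raises KeyError
    if a field is missing, so a forgotten section fails loudly instead of
    reaching the model as a literal "$security".
    """
    fields = {
        key: ", ".join(value) if isinstance(value, list) else value
        for key, value in profile.items()
    }
    return AUDIT_TEMPLATE.substitute(fields)


if __name__ == "__main__":
    print(build_audit_prompt({
        "tools": ["ChatGPT Team", "Copilot", "custom agents"],
        "data": ["customer records", "code repos"],
        "integrations": ["Slack", "CRM", "email"],
        "access": "all employees",
        "security": "none",
    }))
```

Using `substitute()` rather than `safe_substitute()` is the deliberate choice here: an honest "none" is a useful answer, but a blank field is not.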

AI Tool of the Week

Lindy AI

The $19/month employee who never sleeps.

What: No-code platform to build AI agents for CRM updates, email triage, meeting prep, lead qualification, and customer support. Visual drag-and-drop builder—if you can use Trello, you can build a Lindy agent.

Cost: Free tier / $19/month (Starter) / $49/month (Pro)

Why it's different: Lindy agents understand context; they don't just match keywords. An urgent complaint gets escalated. A routine question gets an automatic reply. A hot lead gets flagged, a personalized reply is drafted, and the lead is logged to your CRM. All without you touching it.

Three Use Cases:

1. Lead Qualification: Form submission → AI scores → Hot leads get instant reply + calendar link → Cold leads get nurture sequence → All logged to CRM

2. Meeting Prep: Calendar event detected → Lindy pulls LinkedIn, recent emails, CRM notes → Briefing doc sent 30 minutes before

3. Email Triage: Incoming email → Categorized (billing/technical/sales) → Draft reply generated → Routed if human needed
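Stripped of the visual builder, use case 1 is a score-then-route pipeline. Here is a minimal Python sketch of just the routing step, assuming the AI scoring has already produced a 0-100 score. The 70-point threshold, the `Lead` and `CRMLog` types, and the action names are hypothetical illustrations, not Lindy's API; in Lindy you wire these steps up in the drag-and-drop builder rather than in code.

```python
from dataclasses import dataclass, field

HOT_THRESHOLD = 70  # hypothetical cutoff; tune it to your own pipeline


@dataclass
class Lead:
    email: str
    score: int  # assumed output of the AI scoring step, 0-100


@dataclass
class CRMLog:
    """In-memory stand-in for a real CRM."""
    entries: list[tuple[str, str]] = field(default_factory=list)

    def record(self, lead: Lead, action: str) -> None:
        self.entries.append((lead.email, action))


def route_lead(lead: Lead, crm: CRMLog) -> str:
    """Hot leads get an instant reply with a calendar link; cold leads enter
    a nurture sequence. Every lead is logged to the CRM on either branch."""
    if lead.score >= HOT_THRESHOLD:
        action = "instant_reply_with_calendar_link"
    else:
        action = "nurture_sequence"
    crm.record(lead, action)
    return action


if __name__ == "__main__":
    crm = CRMLog()
    print(route_lead(Lead("cto@example.com", 85), crm))     # instant_reply_with_calendar_link
    print(route_lead(Lead("intern@example.com", 30), crm))  # nurture_sequence
    print(len(crm.entries))                                 # 2
```

The design point worth copying is that logging happens on both branches, matching "All logged to CRM" in the lead-qualification flow.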

The Honest Downsides:

Expect a 2-3 hour learning curve before your first useful agent. The free tier is limited; $19/month is the real starting point. Check their integration list before committing (it covers Gmail, Slack, HubSpot, Notion, Salesforce, and Google Calendar).

Verdict: 4.5/5 — Most accessible AI agent builder available. Pays for itself if it saves 2 hours/week.
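The "pays for itself" claim is simple arithmetic you can check against your own numbers. A minimal Python sketch, assuming 52/12 (about 4.33) weeks per month and the $19 Starter and $49 Pro prices listed above; the function name is hypothetical.

```python
WEEKS_PER_MONTH = 52 / 12  # about 4.33 weeks in an average month


def break_even_hourly_rate(monthly_price: float, hours_saved_per_week: float) -> float:
    """Lowest hourly value of your time at which the subscription covers its cost."""
    hours_saved_per_month = hours_saved_per_week * WEEKS_PER_MONTH
    return monthly_price / hours_saved_per_month


if __name__ == "__main__":
    for plan, price in [("Starter", 19), ("Pro", 49)]:
        rate = break_even_hourly_rate(price, hours_saved_per_week=2)
        print(f"{plan}: breaks even if your time is worth more than ${rate:.2f}/hour")
    # Starter: breaks even if your time is worth more than $2.19/hour
    # Pro: breaks even if your time is worth more than $5.65/hour
```

At either tier the bar is low, so the real test is whether the agent actually saves those two hours once it is built.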

AI Tip of the Week

The "Hostile Reviewer" Technique

Most people ask AI to improve their work. Smart people ask AI to destroy it first.

The problem: AI defaults to being helpful and agreeable. "Review this" gets gentle suggestions. "Find flaws" gets surface-level nitpicks. You need to trigger adversarial mode.

The Prompt (add after generating any content):

"Now adopt the persona of a hostile reviewer who wants to reject this. You're looking for reasons to say no. What's weak? What's unconvincing? What would make a skeptic dismiss this entirely? Be brutal—I need the worst-case interpretation before someone else finds it."
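If you run this through an API instead of a chat window, "add after generating any content" simply means appending one more user turn after the model's draft. A minimal Python sketch, assuming a chat API that accepts a list of role/content messages (a common shape, though field names vary by provider); `build_hostile_review` is a hypothetical helper, and no request is sent.

```python
HOSTILE_REVIEW = (
    "Now adopt the persona of a hostile reviewer who wants to reject this. "
    "You're looking for reasons to say no. What's weak? What's unconvincing? "
    "What would make a skeptic dismiss this entirely? Be brutal. I need the "
    "worst-case interpretation before someone else finds it."
)


def build_hostile_review(request: str, draft: str) -> list[dict[str, str]]:
    """Return the conversation with the hostile-review turn appended.

    The draft stays in the history as an assistant turn, so the critique
    request follows the generated content in the same conversation.
    """
    return [
        {"role": "user", "content": request},
        {"role": "assistant", "content": draft},
        {"role": "user", "content": HOSTILE_REVIEW},
    ]


if __name__ == "__main__":
    messages = build_hostile_review(
        "Write a one-sentence pitch email for our AI platform.",
        "Our AI platform helps companies save time and money while improving "
        "efficiency across all departments.",
    )
    for message in messages:
        print(f"{message['role']}: {message['content'][:60]}")
```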

Before/After Example:

Original pitch email: "Our AI platform helps companies save time and money while improving efficiency across all departments."

Hostile Review: "Generic buzzword soup. 'Save time and money' claims nothing. 'All departments' is impossibly vague. No specific outcome, no proof, no reason to reply. This gets deleted in 2 seconds."

Rewritten after hostile feedback: "We helped [Similar Company] cut invoice processing from 4 hours to 12 minutes. Their AP team now handles 3x the volume with the same headcount. Worth a 15-minute call to see if we can do the same for you?"

The hostile review forced specificity: concrete time savings, named outcome, proof point, clear ask.

When to use it:

Before sending any proposal, pitch, or content someone else will judge. Find the holes before your audience does.

A Framework for Smarter Voice AI Decisions

Deploying Voice AI doesn’t have to rely on guesswork.

This guide introduces the BELL Framework — a structured approach used by enterprises to reduce risk, validate logic, optimize latency, and ensure reliable performance across every call flow.

Learn how a lifecycle approach helps teams deploy faster, improve accuracy, and maintain predictable operations at scale.

What Happened

(The Past 72-Hour Roundup)

Model Wars

• Gemini 3 tops benchmarks — Coding, math, multimodal. Alphabet +12% weekly. [TechCrunch]

• ChatGPT turns 3 — "Minted new market leaders" and made the S&P 500 "even more top heavy." [Bloomberg]

Infrastructure

• Data centers at 4% of US electricity — IEA: global data center consumption to double by 2030 (945 TWh, about Japan's total electricity use today). [IEA]

• 80% of orgs report risky agent behaviors — Microsoft announced the Agent 365 security framework at Ignite. [McKinsey]

Money Moves

• Cursor raises $2.3B at a $29.3B valuation — Nvidia and Google back the AI code editor, which reports $1B in annualized revenue. [SiliconAngle]

• Intuit signs $100M+ OpenAI deal — AI agents across TurboTax, QuickBooks, Credit Karma.

Your Move

Google is winning on benchmarks. OpenAI is building a commerce empire. 80% of companies are already exposed to agent security risks.

Pick ONE:

1. Run the Agent Risk Audit on your current AI tools

2. Sign up for Lindy and automate your most annoying task

3. Test Gemini 3 on something you currently use ChatGPT for

Next Wednesday: The AI tools that survived the bubble talk—and the ones quietly dying.

— R. Lauritsen